Deciphering Ollama model names

If you're new to Ollama, you may be confused by model names. Can you tell the difference between:

- llama3.1
- llama3.1:latest
- llama3.1:8b
- llama3.1:8b-instruct-q4_0
- llama3.1:8b-instruct-q4_K_M
- llama3.1:8b-text-q4_0
- songfy/llama3.1:8b

If not, you will be able to after reading this post.

What's in a name?

An Ollama model name is made up of three parts, two of which may be implicit.

Model namespace

The first part, which may be implicit, is the namespace. It precedes the model name and ends in a slash (/). If it is omitted, it defaults to library/. This means that ollama pull library/llama3.1 and ollama pull llama3.1 are equivalent.
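A minimal Python sketch of the namespace rule, assuming a name contains at most one slash. It ignores fully qualified forms that also include a registry host. The function split_namespace is hypothetical and not part of any Ollama library.

```python
from __future__ import annotations

# The implicit namespace used when a name has no "namespace/" prefix.
DEFAULT_NAMESPACE = "library"


def split_namespace(name: str) -> tuple[str, str]:
    """Return (namespace, rest), falling back to the implicit 'library' namespace."""
    if "/" in name:
        namespace, rest = name.split("/", 1)
        return namespace, rest
    return DEFAULT_NAMESPACE, name


# Both spellings resolve to the same namespace and model.
print(split_namespace("library/llama3.1"))
print(split_namespace("llama3.1"))
print(split_namespace("songfy/llama3.1:8b"))
```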

The default library namespace belongs to Ollama, so only Ollama's maintainers can push to it. In other words, every model in the official model library is maintained by the team behind Ollama, even when the model itself is published by another company such as Google, Meta, or Mistral.

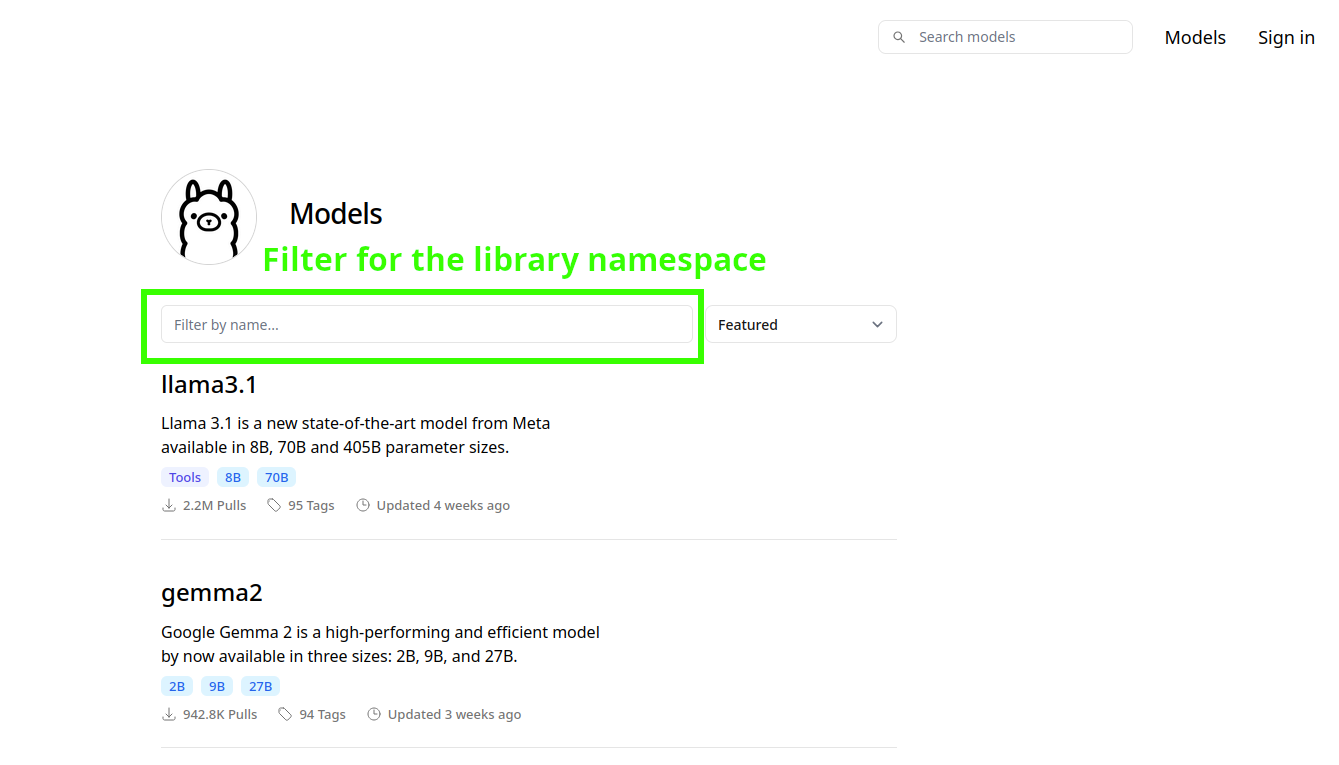

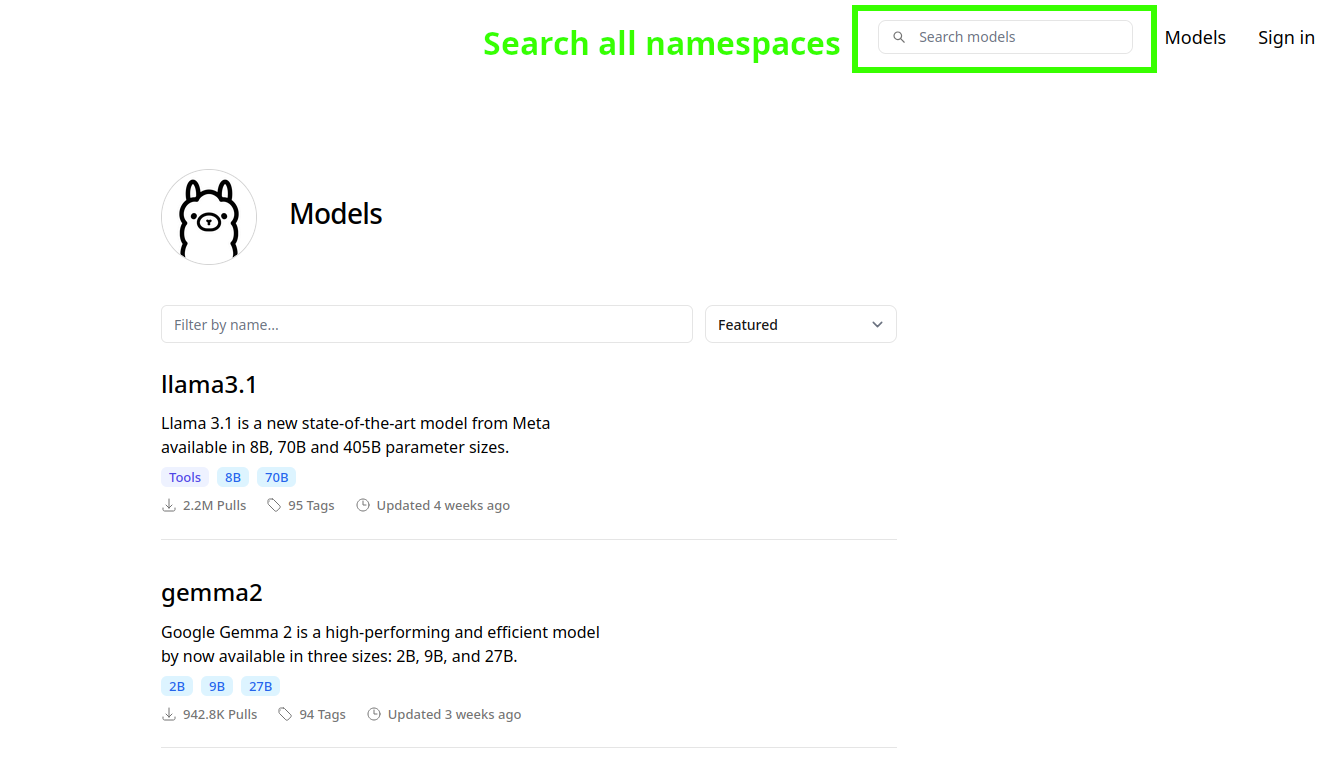

Entering text in the Filter by name... box on the Ollama Library page filters only the official library namespace.

When you sign up on the Ollama website, your username becomes your namespace, so any model you publish should be namespaced with it. For example, in my case, creating the model as theepicdev/nomic-embed-text:v1.5-q6_K with ollama create (and then pushing it with ollama push) allowed me to publish the nomic-embed-text model to https://ollama.com/theepicdev/nomic-embed-text.
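To illustrate how a namespaced name maps to a page on the site, here is a small hypothetical helper, model_page_url. It assumes the URL pattern in the example above (https://ollama.com/<namespace>/<model>) and that official models live under https://ollama.com/library/. It drops the tag, since the example URL does not include one.

```python
def model_page_url(name: str) -> str:
    """Build the ollama.com page URL for a model name (hypothetical helper)."""
    # Drop the tag: everything after the colon is not part of the page path.
    base = name.split(":", 1)[0]
    # Names without a namespace belong to the implicit 'library' namespace.
    if "/" not in base:
        base = "library/" + base
    return "https://ollama.com/" + base


print(model_page_url("theepicdev/nomic-embed-text:v1.5-q6_K"))
print(model_page_url("llama3.1:8b"))
```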

To search through all namespaces, including other users' namespaces, enter your query in the top-right search box on the Ollama site.

Model name

The second part is the model name itself. This part is required, and it is fairly simple: for llama3.1, library/llama3.1, and llama3.1:latest, the model name is llama3.1.

Model tag

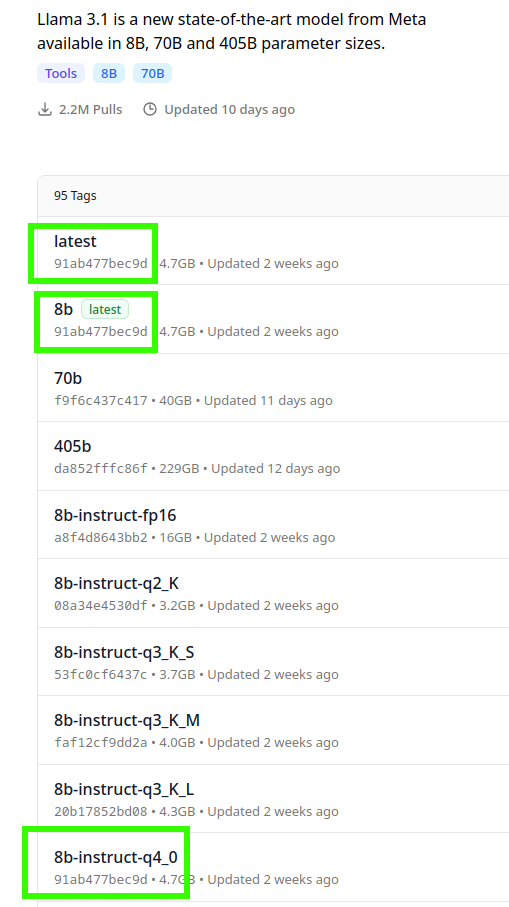

The last part is the tag. It follows the model name after a colon (:) and may also be implicit, in which case it defaults to latest. For example, the tag is latest in llama3.1:latest, and 8b in llama3.1:8b.
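Putting the three parts together, here is a sketch of a parser that fills in both implicit defaults. parse_model_name and ModelRef are hypothetical names for illustration. The sketch assumes tags never contain a slash and treats everything before the last slash as the namespace.

```python
from typing import NamedTuple


class ModelRef(NamedTuple):
    namespace: str
    model: str
    tag: str


def parse_model_name(name: str) -> ModelRef:
    """Split an Ollama model name into namespace, model and tag, filling in defaults."""
    # Everything before the last "/" is the namespace (empty if there is none).
    namespace, _, rest = name.rpartition("/")
    # Everything after the ":" is the tag (empty if there is none).
    model, _, tag = rest.partition(":")
    return ModelRef(namespace or "library", model, tag or "latest")


examples = [
    "llama3.1",
    "llama3.1:latest",
    "llama3.1:8b",
    "llama3.1:8b-instruct-q4_0",
    "llama3.1:8b-instruct-q4_K_M",
    "llama3.1:8b-text-q4_0",
    "songfy/llama3.1:8b",
]
for name in examples:
    print(f"{name:30} -> {parse_model_name(name)}")
```

With the defaults filled in, llama3.1 and llama3.1:latest parse to the same triple, while songfy/llama3.1:8b differs from llama3.1:8b only in its namespace.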

The tag can contain any valid text, including alphanumeric characters, periods, dashes, and underscores.
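As a rough illustration, the check below accepts only the characters listed above. The pattern and the looks_like_valid_tag function are assumptions made for this example; Ollama's actual validation rules may differ.

```python
import re

# Letters, digits, periods, dashes and underscores only.
# Illustrative pattern, not Ollama's own validation rule.
TAG_PATTERN = re.compile(r"[A-Za-z0-9._-]+")


def looks_like_valid_tag(tag: str) -> bool:
    """Return True if the tag is non-empty and uses only the characters above."""
    return TAG_PATTERN.fullmatch(tag) is not None


for tag in ["latest", "8b-instruct-q4_K_M", "v1.5-q6_K", "8b instruct"]:
    print(tag, looks_like_valid_tag(tag))
```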

Tags often encode useful information such as the parameter count, the training variant, and the quantization. For instance, the llama3.1:8b-instruct-q4_0 tag tells us that the model has 8 billion parameters, has been fine-tuned to follow instructions, and uses q4_0 quantization, while llama3.1:8b-text-q4_0 is the pre-trained variant of the same model, without the extra fine-tuning.
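The naming convention above can be decoded heuristically by splitting a tag on dashes and classifying each piece. This is a sketch under stated assumptions: describe_tag and TagInfo are hypothetical, the variant list contains only words mentioned in this post, and tags that don't follow the "<params>-<variant>-<quantization>" shape (such as latest) simply yield empty fields.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Variant words mentioned in this post; real tags may use others.
KNOWN_VARIANTS = {"chat", "instruct", "it", "text", "base", "code"}


@dataclass
class TagInfo:
    params: Optional[str] = None        # parameter count, e.g. "8b" = 8 billion
    variant: Optional[str] = None       # fine-tune / training variant
    quantization: Optional[str] = None  # e.g. "q4_0", "q4_K_M", "fp16"


def describe_tag(tag: str) -> TagInfo:
    """Heuristically decode a dash-separated tag such as '8b-instruct-q4_0'."""
    info = TagInfo()
    for part in tag.split("-"):
        if re.fullmatch(r"\d+(?:\.\d+)?[bm]", part, re.IGNORECASE):
            info.params = part
        elif re.fullmatch(r"q\d\w*|fp16|fp32", part, re.IGNORECASE):
            info.quantization = part
        elif part.lower() in KNOWN_VARIANTS:
            info.variant = part.lower()
    return info


print(describe_tag("8b-instruct-q4_0"))
print(describe_tag("8b-text-q4_0"))
```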

If you want to chat with a model, you typically want a chat, instruct, or it (instruction-tuned) fine-tune. Base or text variants can generate text, but they are not very good at following instructions and are not suitable for building chatbots or intelligent assistants. Likewise, a code variant can be used for code completion in tools such as LSP-based editors, but it is not good at discussing code.
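The rule of thumb above can be expressed as a small filter. suited_for_chat is a hypothetical helper, and the two variant sets come only from the paragraph above. It returns None when the tag doesn't name a variant at all.

```python
from typing import Optional

# Grouping taken from the paragraph above; other variant names exist.
CHAT_VARIANTS = {"chat", "instruct", "it"}
NON_CHAT_VARIANTS = {"base", "text", "code"}


def suited_for_chat(tag: str) -> Optional[bool]:
    """True for chat/instruct/it tags, False for base/text/code, None if unknown."""
    parts = {p.lower() for p in tag.split("-")}
    if parts & CHAT_VARIANTS:
        return True
    if parts & NON_CHAT_VARIANTS:
        return False
    return None


for tag in ["8b-instruct-q4_0", "8b-text-q4_0", "latest"]:
    print(tag, suited_for_chat(tag))
```

Aliases such as latest return None here even when they point at an instruct build. The next section explains how to tell what an alias refers to.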

Model IDs and aliased tags

Note that certain tags are aliases for other tags. As of this writing, the llama3.1:latest, llama3.1:8b, and llama3.1:8b-instruct-q4_0 tags all share the same ID, 91ab477bec9d. This means that all three tags share exactly the same weights, parameters, system prompt, and so on: they are the same model.
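Finding aliases amounts to grouping tags by ID. In this sketch, the three llama3.1 IDs are the ones quoted above, while the ID for llama3.1:8b-text-q4_0 is a made-up placeholder. group_aliases is a hypothetical helper.

```python
from __future__ import annotations

from collections import defaultdict


def group_aliases(tag_ids: dict[str, str]) -> dict[str, list[str]]:
    """Return {id: [tags]} for every ID shared by more than one tag."""
    groups: dict[str, list[str]] = defaultdict(list)
    for tag, model_id in tag_ids.items():
        groups[model_id].append(tag)
    return {mid: sorted(tags) for mid, tags in groups.items() if len(tags) > 1}


tag_ids = {
    "llama3.1:latest": "91ab477bec9d",
    "llama3.1:8b": "91ab477bec9d",
    "llama3.1:8b-instruct-q4_0": "91ab477bec9d",
    "llama3.1:8b-text-q4_0": "placeholder-id",  # placeholder, not a real ID
}
print(group_aliases(tag_ids))
```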

The ID is also a good way to check whether your local copy of a model needs to be updated. Weights, templates, or parameters are sometimes updated to improve a model's performance. Compare the ID shown by ollama ls with the ID on the model's page on the website. If they differ, and especially if your copy is older than the page's last-updated time, run ollama pull again to update the model to the latest version.
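The comparison can be scripted. This sketch assumes the output of ollama ls is a header row followed by one row per model, with the name in the first column and the ID in the second; the sample text is illustrative, not captured output. It also assumes you copy the ID from the website yourself, since the helpers local_ids, needs_update, and read_ollama_list are hypothetical and do not query ollama.com.

```python
from __future__ import annotations

import subprocess


def read_ollama_list() -> str:
    """Capture the text printed by `ollama ls` (requires Ollama to be installed)."""
    return subprocess.run(
        ["ollama", "ls"], capture_output=True, text=True, check=True
    ).stdout


def local_ids(list_output: str) -> dict[str, str]:
    """Map model name -> ID, assuming NAME and ID are the first two columns."""
    ids = {}
    for line in list_output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split()
        if len(fields) >= 2:
            ids[fields[0]] = fields[1]
    return ids


def needs_update(name: str, list_output: str, website_id: str) -> bool:
    """True if the model is installed locally and its ID differs from the website's."""
    local = local_ids(list_output).get(name)
    return local is not None and local != website_id


# Illustrative sample in the assumed layout.
sample = """NAME               ID              SIZE      MODIFIED
llama3.1:latest    91ab477bec9d    4.7 GB    2 weeks ago
"""
print(needs_update("llama3.1:latest", sample, "91ab477bec9d"))
```

In real use you would pass read_ollama_list() instead of sample, and run ollama pull for any model where needs_update returns True.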

Aug. 23, 2024, 3:56 p.m.
